Today I will go over how to extract named entities in two different ways, using popular NLP libraries in Python.

What are named entities?

I'm working with fashion articles, so I will start with some fashion-related examples of named entities:

- Anna Wintour - person's name

- Gucci - brand name

- Paris - location

- Vogue Magazine - brand/organization

Named entities can refer to people's names, brands, organization names, location names, and even things like monetary units, among others.

Basically, anything that has a proper name can be a named entity.

Named Entity Recognition

Named Entity Recognition, or NER, is an information extraction technique that is widely used in Natural Language Processing, or NLP. It aims to extract named entities from unstructured text.

Unstructured text could be any piece of text, from a long article to a short Tweet.

Named entity recognition can be helpful when trying to answer questions like...

- Which brands are the most popular?

- Who are the biggest influencers in fashion?

- What are the hottest fashion items people are talking about?
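Once entities have been extracted, questions like these mostly come down to counting. Here is a minimal sketch, assuming an extraction step has already produced (text, label) pairs. The `mentions` list and the `top_entities` helper are made up for illustration and are not the output of any tagger.

```python
from collections import Counter

# Hypothetical (entity text, label) pairs, as an extraction step might
# produce them across several articles. Invented for illustration only.
mentions = [
    ("Gucci", "ORGANIZATION"),
    ("Anna Wintour", "PERSON"),
    ("Gucci", "ORGANIZATION"),
    ("Paris", "LOCATION"),
    ("Vogue Magazine", "ORGANIZATION"),
    ("Gucci", "ORGANIZATION"),
    ("Anna Wintour", "PERSON"),
]


def top_entities(pairs, label, n=3):
    """Return the n most frequent entity texts that carry the given label."""
    counts = Counter(text for text, ent_label in pairs if ent_label == label)
    return counts.most_common(n)


print(top_entities(mentions, "ORGANIZATION"))
print(top_entities(mentions, "PERSON"))
```

In practice the pairs would come from one of the taggers described in the rest of this post, run over many articles.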

There are several different libraries that we can use to extract named entities, and we will look at a couple of them in this post.

- The Stanford NER tagger with the Natural Language Toolkit (NLTK)

- Spacy

Stanford NER + NLTK

We will use the Named Entity Recognition tagger from Stanford, along with NLTK, which provides a wrapper class for the Stanford NER tagger.

The Stanford NER tagger is written in Java, and the NLTK wrapper class allows us to access it in Python.

You can see the full code for this example here.

Download Stanford NER

The Stanford NER tagger is written in Java, so you will need Java installed on your machine in order to run it.

You can read more about Stanford NER here.

Download the software at nlp.stanford.edu.

This includes the jar file for the NER tagger, as well as pre-trained models that will be used to label the text with named entities.

I'm using the English 3 class model which has Location, Person and Organization entities. You can read more about the models here.

Note the file paths to the jar file and the model. We will need them in the code.

NLTK - Natural Language Toolkit

NLTK is a collection of libraries written in Python for performing NLP analysis.

The NLTK website also has a great book/tutorial that covers many NLP concepts, as well as how to use NLTK.

As I mentioned before, NLTK has a Python wrapper class for the Stanford NER tagger.

Install NLTK

First let's create a virtual environment for this project. The mkvirtualenv command comes from virtualenvwrapper.

mkvirtualenv ner-analysis

Then install NLTK.

pip install nltk

In a new file, import NLTK and add the file paths for the Stanford NER jar file and the model from above.

import nltk

from nltk.tag.stanford import StanfordNERTagger

PATH_TO_JAR = '/Users/christina/Projects/stanford_nlp/stanford-ner/stanford-ner.jar'

PATH_TO_MODEL = '/Users/christina/Projects/stanford_nlp/stanford-ner/classifiers/english.all.3class.distsim.crf.ser.gz'

I also imported the StanfordNERTagger, which is the Python wrapper class in NLTK for the Stanford NER tagger.

Next, initialize the tagger with the jar file path and the model file path.

tagger = StanfordNERTagger(model_filename=PATH_TO_MODEL, path_to_jar=PATH_TO_JAR, encoding='utf-8')
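Since the tagger depends on Java plus two file paths, a wrong path is an easy mistake to make. The sketch below is one way to check the setup before initializing the tagger. The `check_stanford_setup` helper and the placeholder file names are my own, not part of NLTK. It only looks for `java` on the PATH, so treat a miss as a hint rather than proof that Java is absent.

```python
import os
import shutil


def check_stanford_setup(path_to_jar, path_to_model):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    # The Stanford tagger runs on Java, so look for a `java` executable.
    if shutil.which("java") is None:
        problems.append("no `java` executable found on PATH")
    if not os.path.isfile(path_to_jar):
        problems.append(f"jar file not found: {path_to_jar}")
    if not os.path.isfile(path_to_model):
        problems.append(f"model file not found: {path_to_model}")
    return problems


# Placeholder paths; substitute the locations noted during the download step.
jar = "stanford-ner.jar"
model = "english.all.3class.distsim.crf.ser.gz"
for problem in check_stanford_setup(jar, model):
    print("Setup problem:", problem)
```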

Demo sentence

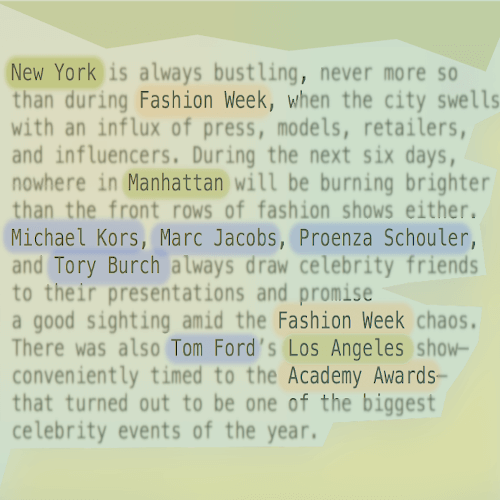

sentence = "First up in London will be Riccardo Tisci, onetime Givenchy darling, favorite of Kardashian-Jenners everywhere, who returns to the catwalk with men’s and women’s wear after a year and a half away, this time to reimagine Burberry after the departure of Christopher Bailey."

I took this sentence from a New York Times article to use for the demo.

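Split the sentence into tokens with NLTK's word tokenizer. Each word or punctuation mark becomes a token. The tokenizer relies on data that NLTK downloads separately; if it is missing, NLTK raises a LookupError that names the resource to fetch with nltk.download().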

words = nltk.word_tokenize(sentence)

Then put the tokenized sentence through the tagger.

tagged = tagger.tag(words)

The output is a list of (token, tag) tuples, pairing each token with its named entity tag.

[('First', 'O'),

('up', 'O'),

('in', 'O'),

('London', 'LOCATION'),

('will', 'O'),

('be', 'O'),

('Riccardo', 'PERSON'),

('Tisci', 'PERSON'),

(',', 'O'),

('onetime', 'O'),

('Givenchy', 'ORGANIZATION'),

('darling', 'O'),

(',', 'O'),

('favorite', 'O'),

('of', 'O'),

('Kardashian-Jenners', 'O'),

('everywhere', 'O'),

(',', 'O'),

('who', 'O'),

('returns', 'O'),

('to', 'O'),

('the', 'O'),

('catwalk', 'O'),

('with', 'O'),

('men', 'O'),

('’', 'O'),

('s', 'O'),

('and', 'O'),

('women', 'O'),

('’', 'O'),

('s', 'O'),

('wear', 'O'),

('after', 'O'),

('a', 'O'),

('year', 'O'),

('and', 'O'),

('a', 'O'),

('half', 'O'),

('away', 'O'),

(',', 'O'),

('this', 'O'),

('time', 'O'),

('to', 'O'),

('reimagine', 'O'),

('Burberry', 'O'),

('after', 'O'),

('the', 'O'),

('departure', 'O'),

('of', 'O'),

('Christopher', 'PERSON'),

('Bailey', 'PERSON'),

('.', 'O')]

It's not perfect - note that neither 'Burberry' nor 'Kardashian-Jenners' was tagged.

But overall, not bad.

The O tag is just a background tag for words that did not fit any of the named entity category labels.
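The tagger labels one token at a time, so a name like 'Riccardo Tisci' comes back as two separate PERSON tokens. A small sketch of how adjacent tokens with the same tag could be joined into whole entities, using a slice of the output shown above. The `merge_entities` helper is my own and not part of NLTK.

```python
from itertools import groupby

# A slice of the tagger output shown above.
tagged = [
    ("in", "O"),
    ("London", "LOCATION"),
    ("will", "O"),
    ("be", "O"),
    ("Riccardo", "PERSON"),
    ("Tisci", "PERSON"),
    (",", "O"),
    ("onetime", "O"),
    ("Givenchy", "ORGANIZATION"),
    ("darling", "O"),
]


def merge_entities(tagged_tokens):
    """Join runs of adjacent tokens that share a non-O tag into one entity."""
    entities = []
    for tag, group in groupby(tagged_tokens, key=lambda pair: pair[1]):
        if tag == "O":
            continue  # background tokens are not entities
        entities.append((" ".join(token for token, _ in group), tag))
    return entities


print(merge_entities(tagged))
# [('London', 'LOCATION'), ('Riccardo Tisci', 'PERSON'), ('Givenchy', 'ORGANIZATION')]
```

One limitation of this approach: two different entities with the same tag that sit right next to each other would be merged into one.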

Spacy

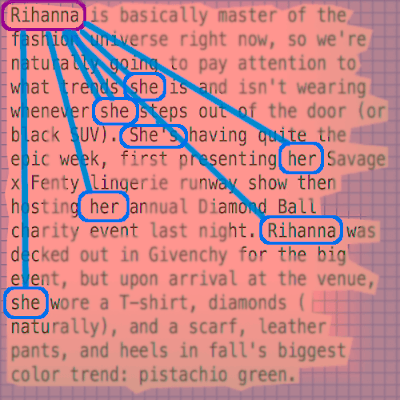

Let's try tagging the same sentence with Spacy.

Spacy is another NLP library, written in Python and Cython.

It is pretty popular and easy to work with, which you will see in a minute.

Install Spacy

First we need to install Spacy, as well as the English model we will use.

pip install spacy

Model

We will download the English model en_core_web_sm - this is the default English model. Spacy has other models as well.

Spacy models can be installed as Python packages and included as a dependency in your requirements.txt file. Read more about that in the docs.

python -m spacy download en_core_web_sm

Using the same demo sentence as in the earlier example, we can extract the named entities in just a couple lines of code with Spacy.

import spacy

sentence = "First up in London will be Riccardo Tisci, onetime Givenchy darling, favorite of Kardashian-Jenners everywhere, who returns to the catwalk with men’s and women’s wear after a year and a half away, this time to reimagine Burberry after the departure of Christopher Bailey."

nlp = spacy.load('en_core_web_sm')

doc = nlp(sentence)

The first step is to load the model into the nlp variable.

Then call nlp on the text. This first tokenizes the document and then runs it through the processing pipeline, which consists of a tagger, a parser, and an entity recognizer.

Now we can loop through the named entities.

for ent in doc.ents:
    print(ent.text, ent.label_)

Here is the output:

First ORDINAL

London GPE

Riccardo Tisci PERSON

Givenchy GPE

Kardashian-Jenners ORG

a year and a half DATE

Burberry PERSON

Christopher Bailey PERSON

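Spacy extracted both 'Kardashian-Jenners' and 'Burberry', so that's great. The labels are less reliable, though: 'Burberry' came back as PERSON and 'Givenchy' as GPE (a geopolitical entity), when both are brands.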
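To see the differences between the two taggers at a glance, the two outputs above can be compared with a few set operations. This is only a sketch: the entities are typed in by hand from the outputs, and the `SPACY_TO_STANFORD` mapping is my own rough assumption about which labels correspond to each other.

```python
# Entities from the two outputs above, typed in by hand. The Stanford
# tokens are already joined into multi-word names.
stanford = {
    "London": "LOCATION",
    "Riccardo Tisci": "PERSON",
    "Givenchy": "ORGANIZATION",
    "Christopher Bailey": "PERSON",
}
spacy_ents = {
    "First": "ORDINAL",
    "London": "GPE",
    "Riccardo Tisci": "PERSON",
    "Givenchy": "GPE",
    "Kardashian-Jenners": "ORG",
    "a year and a half": "DATE",
    "Burberry": "PERSON",
    "Christopher Bailey": "PERSON",
}

# Assumed rough equivalence between the two label sets (my own mapping).
SPACY_TO_STANFORD = {"GPE": "LOCATION", "ORG": "ORGANIZATION", "PERSON": "PERSON"}

only_spacy = sorted(set(spacy_ents) - set(stanford))
only_stanford = sorted(set(stanford) - set(spacy_ents))
# Entities both taggers found, but labelled differently after mapping.
label_conflicts = sorted(
    text
    for text in set(stanford) & set(spacy_ents)
    if SPACY_TO_STANFORD.get(spacy_ents[text]) != stanford[text]
)

print("Only Spacy:", only_spacy)
print("Only Stanford:", only_stanford)
print("Different labels:", label_conflicts)
```

For this sentence, everything Stanford found was also found by Spacy, and 'Givenchy' is the one entity the two taggers label differently.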

Training Custom Models

If an out-of-the-box NER tagger does not quite give you the results you were looking for, do not fret!

With both Stanford NER and Spacy, you can train your own custom models for Named Entity Recognition, using your own data.

If the data you are trying to tag is not very similar to the data used to train the Stanford or Spacy models, then you might have better luck training a model with your own data.

At the end of the day, these models simply make calculations to predict which NER tag fits each word in the text you feed them. If your text is too different from what the tagger was originally trained on, it might not recognize some of the named entities in your text.

'Too different' is subjective, so it's up to you to decide whether these out-of-the-box taggers meet your needs!
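One way to make that decision less subjective is to hand-label a small sample of your own text and measure how much of it a tagger gets right. Below is a minimal sketch using precision and recall on the demo sentence. The `gold` labels are my own reading of the sentence (reasonable people could label it differently), and the `precision_recall` helper is hypothetical, not part of NLTK or Spacy.

```python
def precision_recall(predicted, gold):
    """Compare two sets of (text, label) pairs and return (precision, recall)."""
    predicted, gold = set(predicted), set(gold)
    correct = len(predicted & gold)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(gold) if gold else 0.0
    return precision, recall


# Hand-labelled "gold" entities for the demo sentence. These labels are my
# own judgment calls, using the Stanford 3-class label names.
gold = {
    ("London", "LOCATION"),
    ("Riccardo Tisci", "PERSON"),
    ("Givenchy", "ORGANIZATION"),
    ("Kardashian-Jenners", "PERSON"),
    ("Burberry", "ORGANIZATION"),
    ("Christopher Bailey", "PERSON"),
}

# What the Stanford tagger found above, with multi-token names joined.
stanford_predicted = {
    ("London", "LOCATION"),
    ("Riccardo Tisci", "PERSON"),
    ("Givenchy", "ORGANIZATION"),
    ("Christopher Bailey", "PERSON"),
}

precision, recall = precision_recall(stanford_predicted, gold)
print(f"precision={precision:.2f} recall={recall:.2f}")
# precision=1.00 recall=0.67
```

A single sentence is far too little to judge a tagger on; the same calculation over a few hundred labelled sentences from your own data would give a more useful picture.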

Resources for training custom NER models

Training a custom NER model with Stanford NER.

Training a custom NER model with Spacy.

Thanks for reading!

As always, if you have any questions or comments, write them below or reach out to me on Twitter @LVNGD.