March 22, 2020

Text Normalization for Natural Language Processing in Python

Text preprocessing is an important part of Natural Language Processing (NLP), and normalization of text is one step of preprocessing.

The goal of normalizing text is to group related tokens together, where tokens are usually the words in the text.

Depending on the text you are working with and the type of analysis you are doing, you might not need all of the normalization techniques in this post.

Normalization Techniques

In this post we will go over some of the common ways to normalize text.

- Tokenization

- Removing stopwords

- Handling whitespace

- Converting text to lowercase

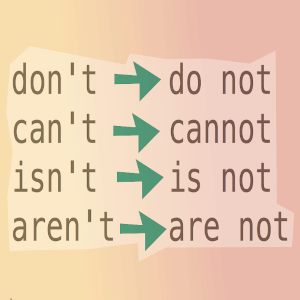

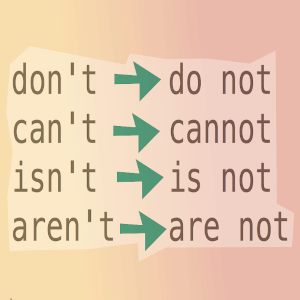

- Expanding contractions (don't -> do not)

- Handling unicode characters - accented letters and some punctuation

- Number words -> numeric

- Stemming and/or Lemmatization

- British vs. American English

Setup

We're going to use the Natural Language Toolkit (NLTK), as well as a few other packages that I will go over in the rest of the post.

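First, create a virtual environment for the project and install the packages we will be using. The gensim package is needed for the model downloader imported below. NLTK's tokenizers, stopword list and WordNet lemmatizer also need data files, which you can fetch with NLTK's downloader (punkt_tab is the tokenizer data used by recent NLTK releases; older releases use punkt).

```shell
pip install nltk
pip install unidecode
pip install pycontractions
pip install word2number
pip install gensim
python -m nltk.downloader punkt punkt_tab stopwords wordnet
```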

These are the modules we will use in this post - go ahead and create a new file and import them.

```python
import re
import string

import unidecode
from nltk.tokenize import sent_tokenize
from nltk.tokenize import word_tokenize
from nltk.stem import WordNetLemmatizer
from nltk.stem.porter import PorterStemmer
from nltk.corpus import stopwords
import gensim.downloader as api
from pycontractions import Contractions
from word2number import w2n
```

For the examples in this post, I'm using text from this article in Vogue magazine.

```python
text = "“Everything we’re doing is about going forward,” Phoebe Philo told Vogue in 2009, shortly before showing her debut Resort collection for Céline. Although the label had garnered headlines when it was revived by Michael Kors in the late ’90s, it was Philo who truly brought the till then somewhat somnambulant luxury house to the forefront. Critics credited her with pushing fashion in a new direction, toward a more spare, stripped-down kind of sophistication. What Céline now offered women was, as the magazine put it, “a grown-up and hip way to put themselves together.”"
```

Tokenization

Tokenization means splitting up strings of text into smaller pieces.

NLTK has a sentence tokenizer, as well as a word tokenizer.

First let's look at the sentence tokenizer.

You may have guessed that the sentence tokenizer will split a paragraph into sentences.

```python
sentences = sent_tokenize(text)
print(sentences)
```

```
['“Everything we’re doing is about going forward,” Phoebe Philo told Vogue in 2009, shortly before showing her debut Resort collection for Céline.',
 'Although the label had garnered headlines when it was revived by Michael Kors in the late ’90s, it was Philo who truly brought the till then somewhat somnambulant luxury house to the forefront.',
 'Critics credited her with pushing fashion in a new direction, toward a more spare, stripped-down kind of sophistication.',
 'What Céline now offered women was, as the magazine put it, “a grown-up and hip way to put themselves together.”']
```

The result here is a list of four sentences.

Now let's use the word tokenizer to split the first sentence into word tokens.

```python
test_sentence = sentences[0]
words = word_tokenize(test_sentence)
print(words)
```

```
['“', 'Everything', 'we', '’', 're', 'doing', 'is', 'about', 'going',
 'forward', ',', '”', 'Phoebe', 'Philo', 'told', 'Vogue', 'in', '2009',
 ',', 'shortly', 'before', 'showing', 'her', 'debut', 'Resort',
 'collection', 'for', 'Céline', '.']
```

If you're a Python developer, you've probably used the split() method to break a string of text into words.

```python
words = test_sentence.split()
print(words)
```

```
['“Everything', 'we’re', 'doing', 'is', 'about', 'going', 'forward,”',
 'Phoebe', 'Philo', 'told', 'Vogue', 'in', '2009,', 'shortly', 'before',
 'showing', 'her', 'debut', 'Resort', 'collection', 'for', 'Céline.']
```

Can you spot the difference between the result using split() and using the NLTK tokenizer?

Using split(), the string is broken into words wherever there is whitespace, so punctuation stays attached to the words instead of becoming its own token.

Here, the token 'Céline.' (with the period on the end) is treated as a different token from 'Céline' without it, but we want these tokens to be grouped together in any analysis because they refer to the same entity.

This could mess up your results in ways you might not immediately notice.
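To see how this skews word counts, here is a small sketch using collections.Counter. To keep it dependency-free it uses a plain regular expression as a stand-in for NLTK's word_tokenize (the simple_tokenize helper and the sample sentence are made up for illustration). It is much cruder than the NLTK tokenizer, but like it, it splits punctuation off into separate tokens.

```python
import re
from collections import Counter

# Two short sentences that mention the same label, once at the end of a sentence.
sample = "Philo joined Céline. Critics loved Céline and its new direction."

# Whitespace splitting keeps the trailing period attached to the word.
split_counts = Counter(sample.split())

def simple_tokenize(s):
    # Stand-in tokenizer (not NLTK): runs of word characters, or any single
    # character that is neither a word character nor whitespace.
    return re.findall(r"\w+|[^\w\s]", s)

token_counts = Counter(simple_tokenize(sample))

print(split_counts["Céline"], split_counts["Céline."])  # 1 1
print(token_counts["Céline"])  # 2
```

With split() the two mentions are counted as two different words; once punctuation is separated, they are counted together.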

Removing stopwords

Stopwords are common words in a language, such as 'the' or 'a', that might not be useful features when building NLP models.

Here I'm using a list of English stopwords from NLTK.

```python
stop_words = set(stopwords.words('english'))
filtered = [word for word in word_tokenize(test_sentence) if word not in stop_words]
print(filtered)
```

```
['“', 'Everything', '’', 'going', 'forward', ',', '”', 'Phoebe', 'Philo', 'told', 'Vogue', '2009', ',', 'shortly', 'showing', 'debut', 'Resort', 'collection', 'Céline', '.']
```
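NLTK's stopword list is all lowercase, so a capitalized stopword at the start of a sentence, such as The, slips through a filter like the one above. A minimal sketch of a case-insensitive filter; the tiny STOP_WORDS set is a hand-picked stand-in for the NLTK list, used here only to keep the example self-contained.

```python
# Hand-picked subset of English stopwords, for illustration only;
# in practice use set(stopwords.words('english')) from NLTK.
STOP_WORDS = {"the", "a", "an", "of", "in", "for", "is", "her", "with"}

def remove_stopwords(tokens, stop_words=STOP_WORDS):
    # Compare in lowercase so sentence-initial words like "The" are caught,
    # while the surviving tokens keep their original casing.
    return [tok for tok in tokens if tok.lower() not in stop_words]

tokens = ["The", "label", "had", "garnered", "headlines", "in", "the", "late", "’90s"]
print(remove_stopwords(tokens))
# ['label', 'had', 'garnered', 'headlines', 'late', '’90s']
```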

Whitespace

You can use the strip() method on strings in Python to remove leading and trailing whitespace.

But sometimes raw text data can look pretty awful in the middle as well.

Maybe you've scraped data from the internet and it came from a website with clunky design where the developers used extra spaces to position text on the page.

The text sample I've been using didn't have any obvious problems with extra spaces, but if it did, it might look like this:

```python
test_sentence = '“Everything   we’re doing is about    going forward,”  Phoebe Philo told Vogue in 2009,     shortly before showing her debut  Resort collection for Céline.'
```

One way to remove the extra whitespace is with a regular expression that replaces every run of one or more spaces in the string with a single space.

```python
test_sentence = re.sub(' +', ' ', test_sentence)
print(test_sentence)
```

```
“Everything we’re doing is about going forward,” Phoebe Philo told Vogue in 2009, shortly before showing her debut Resort collection for Céline.
```
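The ' +' pattern only matches space characters. Scraped text often contains tabs, newlines or non-breaking spaces as well, so here is a sketch that uses \s+ instead (for str patterns in Python 3, \s matches Unicode whitespace, including non-breaking spaces) and then trims the ends with strip(). The normalize_whitespace name is just for illustration.

```python
import re

def normalize_whitespace(s):
    # Collapse every run of whitespace (spaces, tabs, newlines,
    # non-breaking spaces, ...) to a single space, then trim both ends.
    return re.sub(r"\s+", " ", s).strip()

messy = "  Phoebe \t Philo\n\ntold\u00a0\u00a0Vogue  "
print(normalize_whitespace(messy))  # Phoebe Philo told Vogue
```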

Converting to lowercase

This is probably an obvious one, but converting everything to lowercase is an easy way to standardize text data.

```python
test_sentence = test_sentence.lower()
print(test_sentence)
```

```
“everything we’re doing is about going forward,” phoebe philo told vogue in 2009, shortly before showing her debut resort collection for céline.
```
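If you lowercase text so that you can compare or count words regardless of case, Python's built-in str.casefold() is a more aggressive alternative intended for caseless matching. A small illustration with a German word, where the two methods differ:

```python
word = "Straße"

print(word.lower())     # straße
print(word.casefold())  # strasse

# For text like our sample the two give the same result.
print("Céline".lower() == "Céline".casefold())  # True
```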

Expanding contractions

One way to expand contractions such as don't or we're is with regular expressions.

For example, in this sentence we would want to expand we're.

```python
# match we're written with either a curly (’) or a straight (') apostrophe
pattern = r"we[’']re"
replacement = 'we are'
test_sentence = re.sub(pattern, replacement, test_sentence)
print(test_sentence)
```

```
“everything we are doing is about going forward,” phoebe philo told vogue in 2009, shortly before showing her debut resort collection for céline.
```

That worked for this sentence, but your text will likely contain many other contractions, and you would need a regular expression for each of them.
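Instead of writing each pattern by hand, one option is to keep a dictionary of contractions and build a single regular expression from its keys. This is a minimal sketch: the CONTRACTIONS dictionary is a small, hypothetical sample rather than a complete list, it only covers contractions with a single unambiguous expansion, and the expansions are always lowercase, so it is meant to run after lowercasing.

```python
import re

# Hypothetical sample mapping; a real project would need a much longer list.
CONTRACTIONS = {
    "we're": "we are",
    "don't": "do not",
    "can't": "cannot",
    "isn't": "is not",
    "they've": "they have",
}

def _contraction_regex(key):
    # "we're" -> "we['’]re": accept a straight or a curly apostrophe.
    return "['’]".join(re.escape(part) for part in key.split("'"))

_PATTERN = re.compile(
    r"\b(?:" + "|".join(_contraction_regex(k) for k in CONTRACTIONS) + r")\b",
    flags=re.IGNORECASE,
)

def expand_contractions(text):
    def replace(match):
        # Normalize the matched text to the dictionary's key format.
        key = match.group(0).lower().replace("’", "'")
        return CONTRACTIONS[key]
    return _PATTERN.sub(replace, text)

print(expand_contractions("they don’t know we’re here"))  # they do not know we are here
```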

There are also a lot of cases that require context to pick the right expansion. For example, he's can mean he is or he has, and I'd can mean I would or I had.

There is a Python library pycontractions that uses semantic vector models such as Word2Vec, GloVe, FastText, or others, to determine the correct expansion.

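```python
# download the pretrained GloVe Twitter vectors (cached after the first run)
model = api.load("glove-twitter-25")
cont = Contractions(kv_model=model)

# expand_texts() yields results lazily, so wrap it in list();
# use a new name so the article stored in `text` isn't overwritten
expanded = list(cont.expand_texts([test_sentence], precise=True))
print(expanded)
```

```
['“everything we are doing is about going forward,” phoebe philo told vogue in 2009, shortly before showing her debut resort collection for céline.']
```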

Removing punctuation

Punctuation can be removed with the str.translate() method and the ASCII punctuation characters listed in Python's built-in string module.

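```python
# start again from the original first sentence
test_sentence = sentences[0]

# a translation table that deletes every character in string.punctuation
punctuation_table = str.maketrans('', '', string.punctuation)
test_sentence = test_sentence.translate(punctuation_table)
print(test_sentence)
```

```
“Everything we’re doing is about going forward” Phoebe Philo told Vogue in 2009 shortly before showing her debut Resort collection for Céline
```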

Notice that not all of the punctuation in this sentence has been removed.

```python
string.punctuation
```

```
'!"#$%&\'()*+,-./:;<=>?@[\\]^_`{|}~'
```

These are the only punctuation marks the translation table removes.

The commas and the period at the end were removed, but not the quotation marks or the apostrophe.

Why?

The curly quotation marks in this sentence (“ and ”) are non-ASCII Unicode characters, which we will deal with next.
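If you want to drop punctuation of any kind, curly quotes included, one alternative to string.punctuation is to look up each character's Unicode general category with the standard unicodedata module. This sketch removes every character whose category starts with "P". Note that characters such as $, + and ~ are classed as symbols rather than punctuation, so unlike string.punctuation this keeps them. It also turns we’re into were, which is one reason to expand contractions before removing punctuation.

```python
import unicodedata

def strip_unicode_punctuation(s):
    # Keep only characters whose Unicode general category does not start
    # with "P" (Pc, Pd, Ps, Pe, Pi, Pf and Po are the punctuation categories).
    return "".join(ch for ch in s if not unicodedata.category(ch).startswith("P"))

sentence = "“Everything we’re doing is about going forward,” Phoebe Philo told Vogue in 2009."
print(strip_unicode_punctuation(sentence))
# Everything were doing is about going forward Phoebe Philo told Vogue in 2009
```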

Accented characters and other unicode issues

In addition to the quotation marks, the accented é in Céline is also a non-ASCII Unicode character.

Céline and Celine would be considered separate tokens, and the name is written both ways in different fashion articles, even though both spellings refer to the same fashion house.

Removing accents helps to normalize the words in your text data.

As for the punctuation that didn't get removed before, we were trying to remove a character that did not exist in the sentence!

In this sentence, the “ mark is not the same as the " mark, which was in string.punctuation.

```python
'"' in test_sentence
```

```
False
```

We can replace these Unicode characters with ASCII equivalents using the unidecode module.

The module takes a Unicode string and tries to represent it in ASCII characters. Read more here.

```python
# start again from the original first sentence
test_sentence = unidecode.unidecode(sentences[0])
print(test_sentence)
```

```
"Everything we're doing is about going forward," Phoebe Philo told Vogue in 2009, shortly before showing her debut Resort collection for Celine.
```

And check the quotation marks.

```python
'"' in test_sentence
```

```
True
```

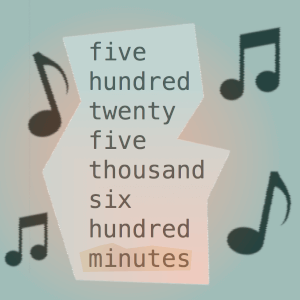
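If you only need to remove accents and would rather not add a dependency, a common standard-library approach (a different technique from unidecode's transliteration) is Unicode NFKD normalization followed by dropping the combining marks. Unlike unidecode, it leaves characters such as curly quotes unchanged. The strip_accents name is just for illustration.

```python
import unicodedata

def strip_accents(s):
    # NFKD splits "é" into "e" followed by a combining acute accent;
    # dropping the combining characters leaves the base letters.
    decomposed = unicodedata.normalize("NFKD", s)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))

print(strip_accents("Céline"))    # Celine
print(strip_accents("“Céline”"))  # “Celine” (the curly quotes are kept)
```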

Convert number words to numeric

You might want to convert numbers from words to numeric.

Or you might want to remove them altogether.

It depends on your project and goals.

Earlier we imported a Python package, word2number, that will convert number words to numbers.

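```python
# use a new name so the article stored in `text` isn't overwritten
number_words = "five hundred twenty five thousand six hundred"
print(w2n.word_to_num(number_words))
```

```
525600
```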
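To give a feel for how this kind of conversion can work, here is a simplified sketch (not word2number's actual implementation): it adds up unit words, multiplies the running value by 100 on hundred, and moves the running value into the total, scaled, at words such as thousand or million. The vocabulary tables cover only common English number words.

```python
UNITS = {
    "zero": 0, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10,
    "eleven": 11, "twelve": 12, "thirteen": 13, "fourteen": 14,
    "fifteen": 15, "sixteen": 16, "seventeen": 17, "eighteen": 18,
    "nineteen": 19, "twenty": 20, "thirty": 30, "forty": 40,
    "fifty": 50, "sixty": 60, "seventy": 70, "eighty": 80, "ninety": 90,
}
SCALES = {"thousand": 1_000, "million": 1_000_000, "billion": 1_000_000_000}

def words_to_number(phrase):
    total, current = 0, 0
    for word in phrase.lower().replace("-", " ").split():
        if word == "and":
            continue                      # "one hundred and five"
        if word in UNITS:
            current += UNITS[word]
        elif word == "hundred":
            current *= 100
        elif word in SCALES:
            total += current * SCALES[word]
            current = 0
        else:
            raise ValueError(f"unrecognized number word: {word!r}")
    return total + current

print(words_to_number("five hundred twenty five thousand six hundred"))  # 525600
```

This sketch expects the phrase to contain only number words; finding number phrases inside running text is a separate problem.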

Stemming and Lemmatization

Lemmatization converts a word to its base form, removing grammatical inflection.

We imported the WordNetLemmatizer from NLTK earlier; it looks up base forms in the WordNet lexical database.

```python
lemmatizer = WordNetLemmatizer()  # create an instance of the lemmatizer
d = lemmatizer.lemmatize('dresses')
print(d)
```

```
dress
```

The lemmatizer takes a word and an optional part of speech (pos); if you do not specify the part of speech, the default is noun.

Stemming is similar to lemmatization, but a stemmer mostly chops off word endings (the Porter stemmer strips suffixes according to a set of rules), while the lemmatizer takes the part of speech into account and is more sophisticated in determining the base form of a word.

```python
stemmer = PorterStemmer()
d = stemmer.stem('dresses')
print(d)
```

```
dress
```
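To see what chopping off suffixes looks like, here is a sketch of only the first rule group of Porter's algorithm (step 1a, which handles plural endings). The full PorterStemmer applies several more steps with conditions on the remaining stem, so treat this as an illustration rather than a replacement. Note that ponies becomes poni: a stem isn't always a real word.

```python
def porter_step_1a(word):
    # Porter step 1a: rules are tried in order and the first match wins.
    if word.endswith("sses"):
        return word[:-2]   # caresses -> caress, dresses -> dress
    if word.endswith("ies"):
        return word[:-2]   # ponies -> poni
    if word.endswith("ss"):
        return word        # caress -> caress
    if word.endswith("s"):
        return word[:-1]   # cats -> cat
    return word

print([porter_step_1a(w) for w in ["dresses", "ponies", "caress", "cats"]])
# ['dress', 'poni', 'caress', 'cat']
```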

You can see the difference with the word 'better'. Here it's an adjective, so we pass pos='a' to the lemmatizer.

```python
better_lemmatized = lemmatizer.lemmatize('better', pos='a')
better_stemmed = stemmer.stem('better')
print(better_lemmatized)
print(better_stemmed)
```

```
good
better
```

The lemmatizer returns the dictionary base form (lemma) of this word, 'good', while the stemmer leaves 'better' unchanged.
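Since the part of speech matters, a common pattern is to tag the tokens first (for example with nltk.pos_tag, which needs its own tagger data) and map the Penn Treebank tags it returns to the single-letter codes the WordNet lemmatizer accepts ('a', 'v', 'r' and the default 'n'). A sketch of that mapping; the treebank_to_wordnet helper is hypothetical, and the NLTK usage is shown only as a comment.

```python
def treebank_to_wordnet(tag):
    """Map a Penn Treebank POS tag (e.g. 'JJR', 'VBD') to a WordNet POS code."""
    if tag.startswith("J"):
        return "a"   # adjectives: JJ, JJR, JJS
    if tag.startswith("V"):
        return "v"   # verbs: VB, VBD, VBG, VBN, VBP, VBZ
    if tag.startswith("RB"):
        return "r"   # adverbs: RB, RBR, RBS
    return "n"       # nouns, and the fallback for everything else

# Possible usage with NLTK (assumes the tagger data is downloaded):
# from nltk import pos_tag
# lemmas = [lemmatizer.lemmatize(w, pos=treebank_to_wordnet(t))
#           for w, t in pos_tag(word_tokenize(sentence))]
```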

You can find the NLTK docs for lemmatizing and stemming here.

British vs. American English

One other thing to consider is whether your text mixes British and American spellings.

I haven't found a package for converting these from one type to the other, but there is a comprehensive list of spelling differences.

You could make a dictionary out of these to convert words in your text.
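A minimal sketch of that idea, assuming a hypothetical UK_TO_US dictionary built from such a list (only a handful of entries are shown). It replaces whole lowercase tokens, so it fits after tokenizing and lowercasing.

```python
# Hypothetical sample of British -> American spellings.
UK_TO_US = {
    "colour": "color",
    "favourite": "favorite",
    "organise": "organize",
    "jewellery": "jewelry",
    "centre": "center",
}

def americanize(tokens):
    # Replace a token only when it appears in the dictionary.
    return [UK_TO_US.get(tok, tok) for tok in tokens]

print(americanize(["her", "favourite", "colour", "is", "black"]))
# ['her', 'favorite', 'color', 'is', 'black']
```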

Putting it all together

To quickly demonstrate, I've put most of these techniques together in a class, which you can find here.

First, it operates on the entire text block:

- removes extra whitespace within text

- converts unicode to ascii

- converts to lowercase

- expands contractions

- tokenizes sentences

Then, on each sentence, it:

- tokenizes words

- removes punctuation

- removes leading or trailing whitespace

- lemmatizes words

- removes stopwords

You could also just do the operations on the whole text block, but sometimes you might want to keep sentences separate.

```python
normalized = TextNormalizer().normalize_text(text)
for sentence in normalized:
    print(sentence)
```

```
everything going forward phoebe philo told vogue 2009 shortly showing debut resort collection celine
although label garnered headline revived michael kor late 90 philo truly brought till somewhat somnambulant luxury house forefront
critic credited pushing fashion new direction toward spare strippeddown kind sophistication
celine offered woman magazine put grownup hip way put together
```

The one issue here is that lemmatization changed Kors in Michael Kors' name to kor.

So it's always important to check your data as you process it so that you can make adjustments.

Lemmatization might not even be necessary, depending on your project.

You could try training your NLP model without doing that type of processing and see how it performs.

Thanks for reading!

If you have any questions or comments, write them below or reach out on Twitter @LVNGD.