I'm going to look at noise complaints from the 311 complaints dataset from NYC Open Data.

Some of the features in this data:

- The dates when the complaint was opened and closed.

- The complaint status - whether the complaint is open or has been closed.

- The complaint type - I'm looking at noise complaints, but there are different types of noise, such as noise from a party, vehicle noise, construction noise, and so on.

- Further description of the complaint.

- The responding agency - the police and other departments respond to different types of complaints.

- Location information, including latitude and longitude coordinates.

- Action taken.

There are different things you could try to predict from this data.

- How long it will take to close out a complaint.

- What the outcome will be or what action, if any, will be taken.

Setup

The first thing to do is to get an API key from NYC Open Data - read this post for how to do that.

Next, create a virtual environment and install the packages we will be working with.

```shell
pip install requests pandas scikit-learn
```

I have a script to fetch the 311 data in a gist, which you can find here.

If you run that script, you will have a dataframe noise_complaints which we will be using in the rest of the post.

The script does the following:

- Fetch 50,000 noise complaints that were opened before January 15, 2020. I chose that cutoff because most of these complaints will have been closed out by now (the end of February, at the time of writing).

- Drop some columns that are irrelevant to noise complaints, as well as some columns related to location information such as street names because I'm not going to use those as features.

- Filter the complaints to only have ones with a status of 'Closed'.
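The cleanup steps above can be sketched roughly as follows. This is not the code from the gist. The helper name clean_noise_complaints, the toy rows, and the assumption that the raw data has a status column labelled 'Closed' are all illustrative.

```python
import pandas as pd


def clean_noise_complaints(raw: pd.DataFrame, keep_columns: list) -> pd.DataFrame:
    """Hypothetical helper: keep only closed complaints and the chosen columns.

    Assumes `raw` has a 'status' column where closed rows are labelled 'Closed'.
    """
    closed = raw[raw["status"] == "Closed"]
    # .copy() gives an independent DataFrame, so later in-place edits don't warn
    return closed[keep_columns].copy()


# Made-up rows standing in for the fetched data
raw = pd.DataFrame({
    "status": ["Closed", "Open", "Closed"],
    "complaint_type": ["Noise - Residential", "Noise - Park", "Noise"],
    "street_name": ["A ST", "B AVE", "C BLVD"],
})
cleaned = clean_noise_complaints(raw, ["complaint_type"])
print(cleaned)
```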

```python
noise_complaints.shape
```

```
(49867, 11)
```

So we have 49,867 rows and 11 columns.

Look at the column names to see the features we currently have.

```python
noise_complaints.columns
```

```
Index(['agency_name', 'borough', 'closed_date', 'complaint_type',
       'created_date', 'descriptor', 'incident_address', 'incident_zip',
       'latitude', 'longitude', 'resolution_description'],
      dtype='object')
```

Missing Data

First, check for missing values in the dataset.

I wrote about missing values in another post on cleaning data with Pandas.

```python
noise_complaints.isnull().sum()
```

```
agency_name                  0
borough                      0
closed_date                  0
complaint_type               0
created_date                 0
descriptor                   0
incident_zip                57
location_type             8613
resolution_description     356
dtype: int64
```

I'm going to drop the incident_address, latitude and longitude columns, because I'm not going to do anything with them, but I will talk about latitude and longitude a bit later in the post.

```python
noise_complaints.drop(['incident_address', 'latitude', 'longitude'], inplace=True, axis=1)
```

The columns with missing values that we need to deal with are location_type, incident_zip (the zip codes), and resolution_description.

Location type

I'm going to fill in the location_type based on the complaint_type and descriptor features, which have some information about the location type.

```python
noise_complaints.location_type.unique()
```

```
array([nan, 'Above Address', 'Residential Building/House',
       'Street/Sidewalk', 'Park/Playground', 'Club/Bar/Restaurant',
       'House of Worship', 'Store/Commercial'], dtype=object)
```

Some of these values, like 'Street/Sidewalk' or 'Residential Building/House', are similar to values in the complaint_type and descriptor columns, shown below.

```python
noise_complaints.complaint_type.unique()
```

```
array(['Noise', 'Noise - Helicopter', 'Noise - Residential',
       'Noise - Street/Sidewalk', 'Noise - Vehicle', 'Noise - Park',
       'Noise - Commercial', 'Noise - House of Worship'], dtype=object)
```

'Noise - Residential' can be matched up with the 'Residential Building/House' location type, for example.

There is also 'Noise - Street/Sidewalk', which can be matched up to the 'Street/Sidewalk' location type.

Here are the unique values in the descriptor column.

```python
noise_complaints.descriptor.unique()
```

```
array(['Noise, Barking Dog (NR5)',
       'Noise: Construction Before/After Hours (NM1)', 'Other',
       'Banging/Pounding', 'Loud Music/Party', 'Loud Talking',
       'Noise: Construction Equipment (NC1)', 'Engine Idling',
       'Noise, Ice Cream Truck (NR4)',
       'Noise: air condition/ventilation equipment (NV1)',
       'Noise: lawn care equipment (NCL)', 'Noise: Alarms (NR3)',
       'Loud Television', 'Car/Truck Horn', 'Car/Truck Music',
       'Noise, Other Animals (NR6)', 'Noise: Jack Hammering (NC2)',
       'Noise: Private Carting Noise (NQ1)',
       'Noise: Boat(Engine,Music,Etc) (NR10)', 'NYPD', 'News Gathering',
       'Noise: Other Noise Sources (Use Comments) (NZZ)',
       'Noise: Manufacturing Noise (NK1)',
       'Noise: Air Condition/Ventilation Equip, Commercial (NJ2)',
       'Noise: Loud Music/Daytime (Mark Date And Time) (NN1)',
       'Horn Honking Sign Requested (NR9)'], dtype=object)
```

After matching up as much as I can, I'm going to add a new category 'Other' for any location types that do not fit in one of the current categories.

I put all of this into the function below. I will then pass it to the DataFrame.apply() method in pandas, which, with axis=1, calls the function on each row of the data.

```python
import pandas as pd

def fill_in_location_type(row):
    # Keep an existing value; only fill in rows where location_type is missing
    if pd.notnull(row['location_type']):
        return row['location_type']

    complaint_type = row['complaint_type']

    if complaint_type == 'Noise - Residential':
        return 'Residential Building/House'
    elif complaint_type in ['Noise - Street/Sidewalk', 'Noise - Vehicle']:
        return 'Street/Sidewalk'
    elif complaint_type == 'Noise - Park':
        return 'Park/Playground'
    elif complaint_type == 'Noise - Commercial':
        return 'Store/Commercial'
    elif complaint_type == 'Noise - House of Worship':
        return 'House of Worship'
    elif complaint_type == 'Noise':
        # Generic noise complaints: use the descriptor to guess the location
        descriptor = row['descriptor']
        if descriptor in ['Loud Music/Party', 'Loud Television', 'Banging/Pounding', 'Loud Talking']:
            return 'Residential Building/House'
        elif descriptor in ['Noise: Jack Hammering (NC2)', 'Noise: Private Carting Noise (NQ1)',
                            'Noise: Construction Before/After Hours (NM1)',
                            'Noise: lawn care equipment (NCL)', 'Car/Truck Music',
                            'Noise: Alarms (NR3)', 'Engine Idling', 'Car/Truck Horn',
                            'Noise, Ice Cream Truck (NR4)', 'Horn Honking Sign Requested (NR9)',
                            'Noise: Construction Equipment (NC1)']:
            return 'Street/Sidewalk'

    # Anything that doesn't fit one of the categories above
    return 'Other'
```

Now pass the fill_in_location_type function to .apply() in order to update the location_type column.

```python
noise_complaints['location_type'] = noise_complaints.apply(fill_in_location_type, axis=1)
```
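You can also avoid a row-by-row apply(). A vectorized alternative maps complaint_type to a location and uses that value only where location_type is missing. This is a minimal sketch with made-up rows. It covers only the complaint_type rules, not the descriptor rules for generic 'Noise' complaints.

```python
import pandas as pd

# complaint_type -> location_type, following the rules in the function above
# (the descriptor-based rules for plain 'Noise' complaints are left out here)
COMPLAINT_TO_LOCATION = {
    "Noise - Residential": "Residential Building/House",
    "Noise - Street/Sidewalk": "Street/Sidewalk",
    "Noise - Vehicle": "Street/Sidewalk",
    "Noise - Park": "Park/Playground",
    "Noise - Commercial": "Store/Commercial",
    "Noise - House of Worship": "House of Worship",
}

# Made-up rows: two missing location types and one existing value
df = pd.DataFrame({
    "complaint_type": ["Noise - Park", "Noise - Helicopter", "Noise - Residential"],
    "location_type": [None, None, "Above Address"],
})

# Unmapped complaint types fall back to 'Other'
inferred = df["complaint_type"].map(COMPLAINT_TO_LOCATION).fillna("Other")
# fillna only touches rows where location_type is missing
df["location_type"] = df["location_type"].fillna(inferred)
print(df)
```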

Zip codes

I'm going to fill in the missing zip codes by sorting the dataset by borough and then forward-filling with pandas. Forward-filling (DataFrame.ffill(); the older fillna(method='ffill') form is deprecated in recent pandas versions) fills in each missing value from the previous row in the data.

I sorted by borough so that missing zip codes would be more likely to be filled in by a row that also has the same borough.

First though, looking at the boroughs in the dataset, there are several rows that are 'Unspecified'.

```python
noise_complaints.borough.value_counts()
```

```
MANHATTAN        23594
BROOKLYN         12256
QUEENS            8771
BRONX             3080
STATEN ISLAND     1994
Unspecified        172
Name: borough, dtype: int64
```

These rows also do not have zip code or other location data, so I am going to filter them out.

```python
noise_complaints = noise_complaints[noise_complaints.borough != 'Unspecified']
```

Now sort by borough and fill in the missing values.

```python
noise_complaints = noise_complaints.sort_values(by='borough')
# Forward-fill missing values in every column from the previous row
noise_complaints = noise_complaints.ffill()
```
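There is one caveat with forward-filling the whole sorted frame. A missing zip at the start of one borough can pick up the last zip of the previous borough. The sketch below keeps the fill inside each borough with a groupby, and it fills only incident_zip. The toy rows are made up. Back-filling the leftover gaps within a group is an extra assumption on my part.

```python
import pandas as pd

# Made-up rows: the first BROOKLYN zip is missing
df = pd.DataFrame({
    "borough": ["BRONX", "BRONX", "BROOKLYN", "BROOKLYN"],
    "incident_zip": ["10451", None, None, "11201"],
})
df = df.sort_values(by="borough", kind="stable")

# Whole-column ffill carries the Bronx zip into the first Brooklyn row
naive = df["incident_zip"].ffill()

# Fill within each borough: forward-fill, then back-fill leading gaps
df["incident_zip"] = (
    df.groupby("borough")["incident_zip"]
      .transform(lambda s: s.ffill().bfill())
)
print(df)
```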

Feature engineering

Now that we've taken care of missing values in the data, it's time to look at the types of features we have and figure out how to best make use of them.

Continuous numerical features

Continuous features take values from a continuous range and are among the most common types of feature. For example:

- product prices

- map coordinates

- temperatures

In this dataset, the incident_zip feature isn't very useful as a number. Zip codes are labels, so adding, subtracting or averaging them doesn't mean anything.

But we could convert it into a numerical feature by replacing the zip code with a count of the number of complaints in that zip code.

If one complaint sample has a zip code 10031, and in the dataset there are 100 complaints with 10031 as the zip code, then 100 will be the value in those rows instead of 10031.

Now the value carries some meaning, because the number of complaints in a zip code could plausibly be useful information.

A groupby object on the incident_zip column with counts for each zipcode gives us this count data, and creating it in Pandas looks like this.

```python
noise_complaints.groupby('incident_zip')['incident_zip'].count()
```

But instead of just computing these counts, I'm going to use the groupby's transform() method to create a column of them, with one value for each row of the original DataFrame.

```python
noise_complaints['zipcode_counts'] = noise_complaints.groupby('incident_zip')['incident_zip'].transform('count')
```

Then drop the original incident_zip column.

```python
noise_complaints.drop(['incident_zip'], inplace=True, axis=1)
```
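Here is a small self-contained example of this count encoding on made-up zip codes. It also shows an equivalent approach using value_counts() and map().

```python
import pandas as pd

# Made-up zip codes: 10031 appears three times, 11201 once
df = pd.DataFrame({"incident_zip": ["10031", "10031", "11201", "10031"]})

# transform('count') returns one count per original row
df["zipcode_counts"] = df.groupby("incident_zip")["incident_zip"].transform("count")

# Equivalent: look up each zip's frequency in value_counts()
alt = df["incident_zip"].map(df["incident_zip"].value_counts())
print(df)
```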

Transforming continuous features

The range of values in raw data can vary a lot, which can be a problem for some machine learning algorithms.

Continuous features often need to be scaled or normalized so that their values lie within a fixed range, such as [0, 1] or [-1, 1].

Feature scaling could be a big discussion in itself - when to do it, and how to scale the features. I'm going to save most of that for another post, but I will just summarize a few things here.

In scikit-learn there are several options for scaling continuous features, including MinMaxScaler, StandardScaler, RobustScaler and MaxAbsScaler.

For example, here is how to use MinMaxScaler to scale the zip code counts so that they lie between 0 and 1.

```python
from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler()
noise_complaints['zipcode_counts'] = scaler.fit_transform(noise_complaints[['zipcode_counts']])
```

First I created an instance of MinMaxScaler, and then called .fit_transform() on the zipcode_counts column. The double brackets select a one-column DataFrame, because scikit-learn scalers expect 2D input.

```python
noise_complaints['zipcode_counts'].head()
```

```
24953    1.000000
12113    0.347469
33520    0.549248
33519    0.367305
33517    1.000000
Name: zipcode_counts, dtype: float64
```

You can see that the values have been scaled so that they lie between 0 and 1, which is the default range for this scaler.
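Under the hood, MinMaxScaler applies x' = (x - min) / (max - min) to each column, using the minimum and maximum it saw during fit(). Here is a quick check on made-up counts:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up zip code counts as a single column (scikit-learn expects 2D input)
counts = np.array([[100.0], [250.0], [400.0]])

scaled = MinMaxScaler().fit_transform(counts)

# The same formula by hand; with one column, the global min/max
# are the column's min/max
manual = (counts - counts.min()) / (counts.max() - counts.min())
print(scaled.ravel(), manual.ravel())
```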

Check out the docs for more about how this scaler works.

Using the other scalers in scikit-learn involves a similar process of fitting and transforming the data.

You can also pass an entire DataFrame of numerical columns to the scaler, and it will scale each column independently.
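For example, StandardScaler rescales each column to mean 0 and standard deviation 1. In this sketch, the complaint_duration column and all of the values are made up. In a real project you would fit the scaler on the training set only and reuse it to transform the test set, so that no information leaks from the test data.

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Made-up values for two numerical columns
df = pd.DataFrame({
    "zipcode_counts": [100.0, 250.0, 400.0],
    "complaint_duration": [600.0, 3600.0, 7200.0],
})

scaler = StandardScaler()
# fit_transform returns a NumPy array; wrap it to keep column names and index
scaled = pd.DataFrame(scaler.fit_transform(df), columns=df.columns, index=df.index)
print(scaled.round(3))
```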

Log transformation

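Another way to transform the values is by taking the log of each value.

This is usually done on right-skewed data, such as counts or durations with a long tail. It compresses large values and can make the distribution more symmetric and closer to normal.

NumPy has functions for this, such as np.log and np.log1p. np.log1p computes log(1 + x), so it also works for zeros.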
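Here is a minimal sketch of a log transform with NumPy on made-up durations. np.log would return -inf for a zero, so this uses np.log1p. Whether a shift of 1 suits your data is a judgment call.

```python
import numpy as np
import pandas as pd

# Made-up, right-skewed durations in seconds (one zero, one very long value)
durations = pd.Series([0, 60, 300, 3600, 86400])

# np.log1p computes log(1 + x), so a zero maps to 0 instead of -inf
log_durations = np.log1p(durations)
print(log_durations.round(2))
```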

Categorical features

Categorical features have discrete values from a limited set, and there are several possibilities for these in the noise complaint data.

In the real world you've probably seen some examples of categorical data.

- A survey with a scale from 'totally disagree' to 'totally agree'.

- The rating system on many websites of 1-5 stars, where 5 is the highest.

- Book genres: fiction, autobiographical, science, cookbooks, etc.

The set can be ordinal or nominal (unordered). A rating system has an order, while the list of book genres does not.

The agency_name feature from the noise complaint data is a good example that we will look at now.

The agency refers to the responding agency for different types of noise complaints.

When you submit a 311 complaint, it often gets routed to another department to be handled. A well-known example: if you call 311 about a loud party, the complaint gets routed to the police, who then respond to it.

```python
noise_complaints.agency_name.unique()
```

```
array(['Department of Environmental Protection',
       'New York City Police Department',
       'Economic Development Corporation'], dtype=object)
```

In this dataset there are three departments that handle the noise complaints, so we have three categories.

- Department of Environmental Protection

- New York City Police Department

- Economic Development Corporation

Many machine learning algorithms, including most scikit-learn estimators, can't work with categories in string format like this. We have to encode them as numbers that the algorithms can understand.

One-hot encoding

Categorical features must be converted to a numeric format, and a popular way to do this is one-hot encoding.

After encoding we will have a binary feature for each category to indicate the presence or absence of that category with 1 and 0 - these are called dummy variables.

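We can do this in pandas with the pd.get_dummies() function, encoding the agency_name feature.

```python
# dtype=int gives 0/1 columns; recent pandas versions return True/False by default
agency_dummies = pd.get_dummies(noise_complaints["agency_name"], prefix='agency', dtype=int)
```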

And this is what the resulting columns look like.

| | agency_Department of Environmental Protection | agency_Economic Development Corporation | agency_New York City Police Department |
|---|---|---|---|
| 13152 | 1 | 0 | 0 |
| 14110 | 1 | 0 | 0 |
| 47065 | 1 | 0 | 0 |
| 14111 | 0 | 0 | 1 |
| 3404 | 1 | 0 | 0 |

Bring it together in one line to create the dummy columns from the agency_name feature, add them to the original dataframe noise_complaints, and drop the original agency_name column:

```python
noise_complaints = pd.concat([noise_complaints, agency_dummies], axis=1).drop(['agency_name'], axis=1)
```
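pd.get_dummies() can also do the encoding, the concatenation and the drop in a single call through its columns parameter. Here is a sketch on made-up rows. dtype=int is there because recent pandas versions return True/False dummy columns by default.

```python
import pandas as pd

# Made-up rows with one categorical column to encode and one to keep
df = pd.DataFrame({
    "agency_name": ["Department of Environmental Protection",
                    "New York City Police Department",
                    "Department of Environmental Protection"],
    "borough": ["MANHATTAN", "BROOKLYN", "QUEENS"],
})

# Encodes agency_name, drops the original column, keeps borough as-is
encoded = pd.get_dummies(df, columns=["agency_name"], prefix="agency", dtype=int)
print(encoded.columns.tolist())
```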

Label encoding

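Another option is label encoding.

If the feature has a large number of classes, you can label them with integers from 0 to n-1, where n is the number of classes. This keeps a single column instead of one column per class, but the integers imply an order between the classes that may not really exist.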

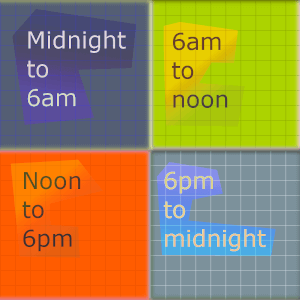
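Here is a sketch of label encoding on a few made-up descriptor values, shown two ways: pandas category codes and scikit-learn's OrdinalEncoder. scikit-learn also has LabelEncoder, but its documentation describes it as intended for target labels rather than input features. Both approaches below assign codes in sorted order of the category names.

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

# Made-up descriptor values
df = pd.DataFrame({"descriptor": ["Loud Music/Party", "Engine Idling",
                                  "Loud Music/Party", "Banging/Pounding"]})

# pandas: integer codes follow the sorted category names
df["descriptor_code"] = df["descriptor"].astype("category").cat.codes

# scikit-learn: OrdinalEncoder takes 2D input and returns floats
encoder = OrdinalEncoder()
df["descriptor_ord"] = encoder.fit_transform(df[["descriptor"]]).ravel()
print(df)
```

Because the codes are arbitrary, this tends to suit tree-based models better than linear models. A linear model would treat the codes as a meaningful scale.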

Date features

Dates are another type of feature that often need to be transformed before machine learning algorithms can use them. Some features you can derive from dates:

- extract days, weeks, months, hours, etc.

- days of the week or months of the year mapped to numbers

  - Sunday = 0, Monday = 1, etc.

  - January = 0, February = 1, etc.

- fiscal quarters

- time difference between dates

- period of the day - morning, afternoon, evening

- business hours

- seasons - Winter, Spring, Summer, Fall

- indicate if the date is a weekday or weekend
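Several of the features in the list above can be derived with the pandas .dt accessor. Here is a sketch on three made-up timestamps. The business hours, the period-of-day cut points and the month-to-season mapping are assumptions you would adjust to your own definitions.

```python
import pandas as pd

created = pd.Series(pd.to_datetime([
    "2020-01-04 23:30",  # a Saturday night
    "2020-01-06 09:15",  # a Monday morning
    "2020-01-08 14:00",  # a Wednesday afternoon
]))

# Meteorological seasons (Northern Hemisphere), keyed by month number
SEASONS = {12: "Winter", 1: "Winter", 2: "Winter", 3: "Spring", 4: "Spring", 5: "Spring",
           6: "Summer", 7: "Summer", 8: "Summer", 9: "Fall", 10: "Fall", 11: "Fall"}

features = pd.DataFrame({
    "quarter": created.dt.quarter,
    "is_weekend": (created.dt.weekday >= 5).astype(int),  # Saturday=5, Sunday=6
    # Hypothetical business hours: 9:00-16:59, Monday to Friday
    "business_hours": (created.dt.hour.between(9, 16) & (created.dt.weekday < 5)).astype(int),
    # Hypothetical cut points for the period of the day
    "period": pd.cut(created.dt.hour, bins=[0, 6, 12, 18, 24], right=False,
                     labels=["night", "morning", "afternoon", "evening"]),
    "season": created.dt.month.map(SEASONS),
})
print(features)
```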

Elapsed time

For the noise complaint data, one date feature could be the time difference between created_date and closed_date.

This could serve as the target variable if you wanted to predict how long it takes to close out different complaints, one of the ideas from the beginning of the post.

```python
# total_seconds() counts the whole duration; .dt.seconds would drop the days
noise_complaints['complaint_duration'] = (noise_complaints['closed_date'] - noise_complaints['created_date']).dt.total_seconds()
```

Here I've created a new feature, complaint_duration, which is the time in seconds from when the complaint was created to when it was closed.
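A quick check with made-up timestamps shows why the full duration needs .dt.total_seconds(). By contrast, .dt.seconds returns only the seconds component of each timedelta (0 to 86399) and drops whole days.

```python
import pandas as pd

created = pd.Series(pd.to_datetime(["2020-01-10 08:00"]))
closed = pd.Series(pd.to_datetime(["2020-01-12 09:30"]))
delta = closed - created  # 2 days, 1 hour 30 minutes

print(delta.dt.seconds.iloc[0])          # 5400: only the 1h30m part
print(delta.dt.total_seconds().iloc[0])  # 178200.0: the full duration
```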

Days of the week

Another feature could be the day of the week on which a complaint was created.

```python
noise_complaints['weekday'] = noise_complaints['created_date'].dt.weekday
```

Here the new feature weekday is an integer from 0 to 6, where 0 is Monday and 6 is Sunday.

Months of the year

Similar to the weekday feature, now we will have numbers for each month of the year.

```python
noise_complaints['month'] = noise_complaints['created_date'].dt.month
```

With either the weekday or the month feature, you could also encode the numbers as categorical variables, as we did with the agency_name feature earlier.
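Here is a sketch of one-hot encoding the weekday and month numbers with pd.get_dummies() on made-up values. Passing columns= encodes those columns and drops the originals.

```python
import pandas as pd

# Made-up weekday (0=Monday) and month numbers
df = pd.DataFrame({"weekday": [0, 5, 6, 0], "month": [1, 1, 12, 2]})

# One 0/1 column per observed weekday and month value
encoded = pd.get_dummies(df, columns=["weekday", "month"], dtype=int)
print(encoded.columns.tolist())
```

get_dummies() only creates columns for values that appear in the data it sees. A test set could contain a month that is missing from the training data. One way to keep the columns consistent in that case is scikit-learn's OneHotEncoder with handle_unknown='ignore', fitted on the training data.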

Binning