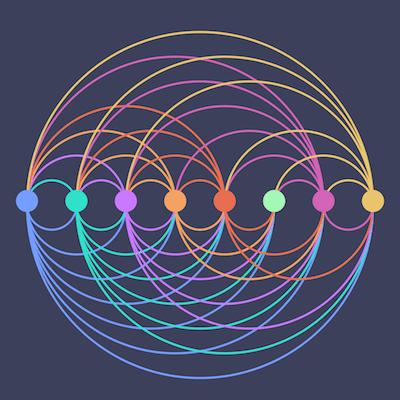

In an arc diagram, the nodes all lie along one axis, and the links between them are drawn as arcs.

Ride Hailing Apps in NYC

This arc diagram visualizes rides from the ride hailing apps Uber, Lyft, Via and Gett / Juno in NYC.

- Each ride starts in a borough or at an airport, and ends in a borough or at an airport.

- The nodes in this graph are the boroughs and airports, which are the circles in the middle of the diagram.

- The links connect two nodes, from the pickup location to the dropoff location.

- Link thickness is based on the count of rides between the two nodes, so you can see here that the link from Manhattan to Brooklyn is thicker than the link from Manhattan to the Bronx because there were more rides between them.

We will build this arc diagram in two parts.

Part One: Data Preparation

In this post I'm going to go over data collection and preparation using Python and Pandas.

There are three data sources that we need for this project, so we will be doing some data wrangling and ultimately end up with a dataset representing the nodes and links that will make up the graph.

Then we will output the data in JSON format and write it to a file.

Part Two: D3.js Visualization

In the next post, we will take the JSON data and actually create the arc diagram in D3.js.

Stay tuned for that!

Three Data Sources

We will be using three data sources for this project.

-

For Hire Vehicle trip data

- Each row in this dataset represents an individual ride.

- The full dataset has around 40 million rows.

-

Taxi zones

- NYC is divided up into numbered geographic zones, and they are used in the first dataset to indicate pickup and dropoff locations for a ride.

-

Base dispatch license numbers

- These indicate the company such as Uber or Lyft, and we are using them as a filter on the first dataset to only fetch the ride data for the ride-hailing apps.

For Hire Vehicle Trip Data

Uber, Lyft, and other apps fall under the For Hire Vehicle (FHV) umbrella, and there is data for these trips on NYC Open Data.

This dataset has approximately 41 million rows with one for each trip recorded, and about 20 million of them are for the big ride hailing apps that are covered in this visualization.

Aside from the ride hailing apps, there are a bunch of smaller dispatch companies that make up the rest of the 41 million rows.

It does not include yellow or green taxi trip data.

To create this graphic, I fetched all 20 million of the relevant rows from the API, but for this post, we will fetch a much smaller number.

There are six columns in this dataset

- Dispatching base license number

- Pickup datetime

- Dropoff datetime

- Pickup location ID - corresponds to a taxi zone.

- Dropoff location ID - also corresponds to a taxi zone.

- There is a shared ride flag for shared rides, which is a feature in some apps.

As mentioned, each company, e.g. Uber, has a base license number, which we will use to filter the data and only fetch rows corresponding to one of the apps from the API.

So we need the base license numbers for Uber, Lyft, Via, and Juno / Gett, which I will talk about in a bit.

The pickup and dropoff location IDs are integers, and they correspond to taxi zones.

Taxi Zones

Basically the city is divided into numbered zones that correspond to different geographic areas, and this data provides more information like the borough or airport name for each of the numbered zones.

In the FHV trip data, the pickup and dropoff location IDs correspond to a numbered taxi zone, so we will use the zone number as a key to map the borough or airport name back to the trip data.

The data for these zones can be found on NYC Open Data.

You can download it in CSV format and then load into a Pandas DataFrame.

zones = pd.read_csv(path_to_downloaded_file)

We are only concerned with three columns: LocationID, zone, and borough.

zones[['LocationID', 'zone', 'borough']].head()

LocationID zone borough

0 1 Newark Airport EWR

1 2 Jamaica Bay Queens

2 3 Allerton/Pelham Gardens Bronx

3 4 Alphabet City Manhattan

4 5 Arden Heights Staten Island

- The location ID is a number that represents a geographic area.

- Zones: you can see here one of the zones is Newark Airport, and there are zones for LaGuardia and JFK airports as well. The rest are NYC neighborhoods, which I am not concerned with for this visualization.

- The borough column is self explanatory - for Newark Airport you can see that they have EWR in that column (the airport code), but the other two airports are in Queens and have that as their borough.

Create a new column with Pandas apply()

I'm going to create a new column using the Pandas apply function that either has the borough name the zone is in, or the zone name if it is one of the airports.

def zone_location(row):

airports = ['JFK Airport', 'LaGuardia Airport', 'Newark Airport']

if row['zone'] in airports:

return row['zone']

return row['borough']

zones['borough_airport'] = zones.apply(zone_location,axis=1)

You can see that this new column has either the airport name or the borough name.

zones['borough_airport'].head()

0 Newark Airport

1 Queens

2 Bronx

3 Manhattan

4 Staten Island

Name: borough_airport, dtype: object

Mapping the borough or airport back to the FHV trip records

Later we will want to map this column to the pickup and dropoff location IDs in the FHV trip data, so that we have the borough or airport information for each ride's pickup and dropoff.

So I am going to create a Python dictionary with the location ID column as keys and the borough/airport as values.

zones_dict = dict(zip(zones.LocationID, zones.borough_airport))

We will come back to this after we've fetched the FHV trip data from NYC Open Data.

Base Dispatch License Numbers

As mentioned, we will be filtering the API request for FHV trip data by the base license numbers.

I found the data for the base license numbers for the various ride hailing apps in a PDF file.

uber = ['B02764','B02879','B02765','B02598','B02404','B02870','B02864','B02512','B02865','B02617','B02875','B02682','B02869','B02866', 'B02865', 'B02395','B02876', 'B02869', 'B02882', 'B02866', 'B02877', 'B02875', 'B02883', 'B02864', 'B02888', 'B02871', 'B02889', 'B02835', 'B02884', 'B02836', 'B02872', 'B02887', 'B02878', 'B02867', 'B02879', 'B02870', 'B02880']

via = ['B02800','B03136']

gett_juno = ['B02907', 'B02908', 'B02914','B03035']

lyft = ['B02510', 'B02844']

base_nums = []

for b in [uber,via,gett_juno,lyft]:

base_nums.extend(b)

I kept the companies separate just in case I want to do further analysis at some point, and then created a list for all of them in base_nums which we will use to fetch the trip data from NYC Open Data.

As mentioned earlier, we are going to use the list base_nums as a filter so that we only fetch the trip data for the ride hailing apps.

Fetching the FHV trip data

Now we are ready to fetch the trip records from the API, which we will do with Python requests and load it into a Pandas DataFrame.

First you need an API key.

import requests

NYC_OPEN_DATA_API_KEY='your_api_key_here'

Building a SoQL query with the IN operator

- Note, if you google SoQL you will probably find results about Salesforce, but the Socrata Query Language is also called SoQL and that is what we are using here.

We are only going to fetch the records with base license numbers in the base_nums list we compiled earlier.

To do this we will build a SoQL query using the IN operator which is similar to the same operator in SQL, and similar to functionality in Python where you check if an item is in a list.

Format the query string

First we have to format the list base_nums into a string that will create a valid API query.

def format_dispatch_base_num(base_nums):

base_num_str = '"{}",'*(len(base_nums)-1) + '"{}"'

base_num_string = base_num_str.format(*base_nums)

return base_num_string

dispatch_base_num = format_dispatch_base_num(base_nums)

Just pass in the base_nums list to the function, and the resulting string looks like this:

'"B02764","B02879","B02765","B02598","B02404","B02870","B02864","B02512","B02865","B02617","B02875","B02682","B02869","B02866","B02865","B02395","B02876","B02869","B02882","B02866","B02877","B02875","B02883","B02864","B02888","B02871","B02889","B02835","B02884","B02836","B02872","B02887","B02878","B02867","B02879","B02870","B02880","B02800","B03136","B02907","B02908","B02914","B03035","B02510","B02844"'

And then we will just use Python string formatting to pop that into the API request endpoint.

The column name for the taxi base numbers is dispatching_base_num.

endpoint = 'https://data.cityofnewyork.us/resource/u6nh-b56h.json?$where=dispatching_base_num IN({})&$limit=50000'.format(dispatch_base_num)

We're fetching 50,000 records in this post with $limit=50000, but feel free to tweak this to fetch the full 20 million-ish rows if you'd like!

And now we are ready to send the request to the API and load the JSON response into a DataFrame.

headers={'X-App-Token': NYC_OPEN_DATA_API_KEY}

result = requests.get(endpoint, headers=headers)

df = pd.DataFrame(result.json())

Look at the first few rows.

df.head()

dispatching_base_num dolocationid dropoff_datetime pickup_datetime pulocationid sr_flag

0 B02395 48 2019-01-01T00:48:41.000 2019-01-01T00:15:25.000 90 NaN

1 B02395 144 2019-01-01T01:16:07.000 2019-01-01T00:50:26.000 48 1

2 B02395 144 2019-01-01T01:16:07.000 2019-01-01T00:51:20.000 48 2

3 B02395 112 2019-01-01T00:23:34.000 2019-01-01T00:17:02.000 112 NaN

4 B02395 145 2019-01-01T00:37:54.000 2019-01-01T00:28:24.000 112 NaN

I'm going to drop any rows that have NaN values in the dispatching_base_num, dolocationid, or pulocationid columns.

df.dropna(subset=['dolocationid', 'pulocationid', 'dispatching_base_num'],inplace=True)

This is where the zones_dict from earlier comes in.

I'm going to create a couple of new columns for the pickup and dropoff locations that will hold the string value of either the borough or airport name from zones_dict from the corresponding location ID.

First convert the two location ID columns to type integer.

df['dolocationid'] = df['dolocationid'].astype('int')

df['pulocationid'] = df['pulocationid'].astype('int')

The new columns are named do_name and pu_name respectively, and we map the values from zones_dict to them based on the location ID.

df['do_name'] = df['dolocationid'].map(zones_dict)

df['pu_name'] = df['pulocationid'].map(zones_dict)

Grouping the data to prepare it for the arc diagram

We're going to do a groupby on the pu_name and do_name columns and get the count in each group, which is the number of rides.

df.groupby(['pu_name', 'do_name'])['pu_name'].count()

The first few groups look like this:

pu_name do_name

Bronx Bronx 8801

Brooklyn 103

JFK Airport 43

LaGuardia Airport 64

Manhattan 2177

Newark Airport 2

Queens 269

Staten Island 1

Brooklyn Bronx 61

Brooklyn 9030

JFK Airport 235

LaGuardia Airport 103

Manhattan 1296

Newark Airport 25

Queens 821

Staten Island 40

JFK Airport Bronx 21

Brooklyn 147

JFK Airport 7

LaGuardia Airport 19

Manhattan 272

Newark Airport 3

Queens 105

Staten Island 1

For each pickup-dropoff pair, we have a count of the number of rides.

Matrix graph representation

Let's use unstack to pivot the inner index do_name to columns, and just fill in any NaN values with 0.

df.groupby(['pu_name', 'do_name'])['pu_name'].count().unstack(fill_value=0)

do_name Bronx Brooklyn JFK Airport LaGuardia Airport Manhattan Newark Airport Queens Staten Island

pu_name

Bronx 8801 103 43 64 2177 2 269 1

Brooklyn 61 9030 235 103 1296 25 821 40

JFK Airport 21 147 7 19 272 3 105 1

LaGuardia Airport 17 55 4 2 192 1 50 0

Manhattan 1609 1007 416 390 11701 183 693 15

Queens 178 885 246 219 942 8 5761 2

Staten Island 1 25 3 0 9 3 2 233

This is our graph, represented as a matrix, that we will be visualizing as an arc diagram.

Pandas has a to_json method that we can use to easily convert the data and then write it to a file - check out the docs here.

We will also reset the index before generating the JSON.

Putting it all together

I used unstack just to show you the matrix, but we don't need to do that.

df_pu_counts = df.groupby(['pu_name', 'do_name'])['pu_name'].count().reset_index(name="count")

df_pu_counts.to_json(path_or_buf='taxi_data.json', orient="records")

There are a few ways to orient the JSON, which you can read about in the docs, but here we are using records to get the columns and their values in the format that we will use with D3.js.

We wrote the JSON data to a file with path_or_buf which you can just pass any file path to.

Here are a few of the JSON objects - I didn't copy and paste all of it here.

[

{"pu_name":"Bronx","do_name":"Bronx","count":8801},

{"pu_name":"Bronx","do_name":"Brooklyn","count":103},

{"pu_name":"Bronx","do_name":"JFK Airport","count":43}

...

]

These are the links in the graph - the link from pickup to dropoff and then the ride count, which will be used to set the thickness of the arcs.

Thanks for reading!

Stay tuned for Part II where we will actually create the arc diagram in D3.js.

Let me know if you have any questions or comments either in the comments below, or on Twitter @LVNGD.